onprem.ai for SMEs

Local AI Infrastructure for Secure and Controlled Applications

Ready to use immediately, cost-transparent and user-friendly. For direct deployment in the office or as an interface for your own AI projects with sensitive data. Developed in Switzerland by independent AI experts with years of practical experience in the business sector.

Ready to Go.

High-Performance AI Server Clusters – Preconfigured with APIs and Apps

Ready for immediate professional use. Datacenter-grade hardware and seamlessly scalable software deliver noticeably lower latency than cloud-based solutions, whether used as a single server or combined into a cluster. Many apps and APIs that users already know from the cloud are ready to use out of the box.

No Blackbox.

Resource Management and Monitoring

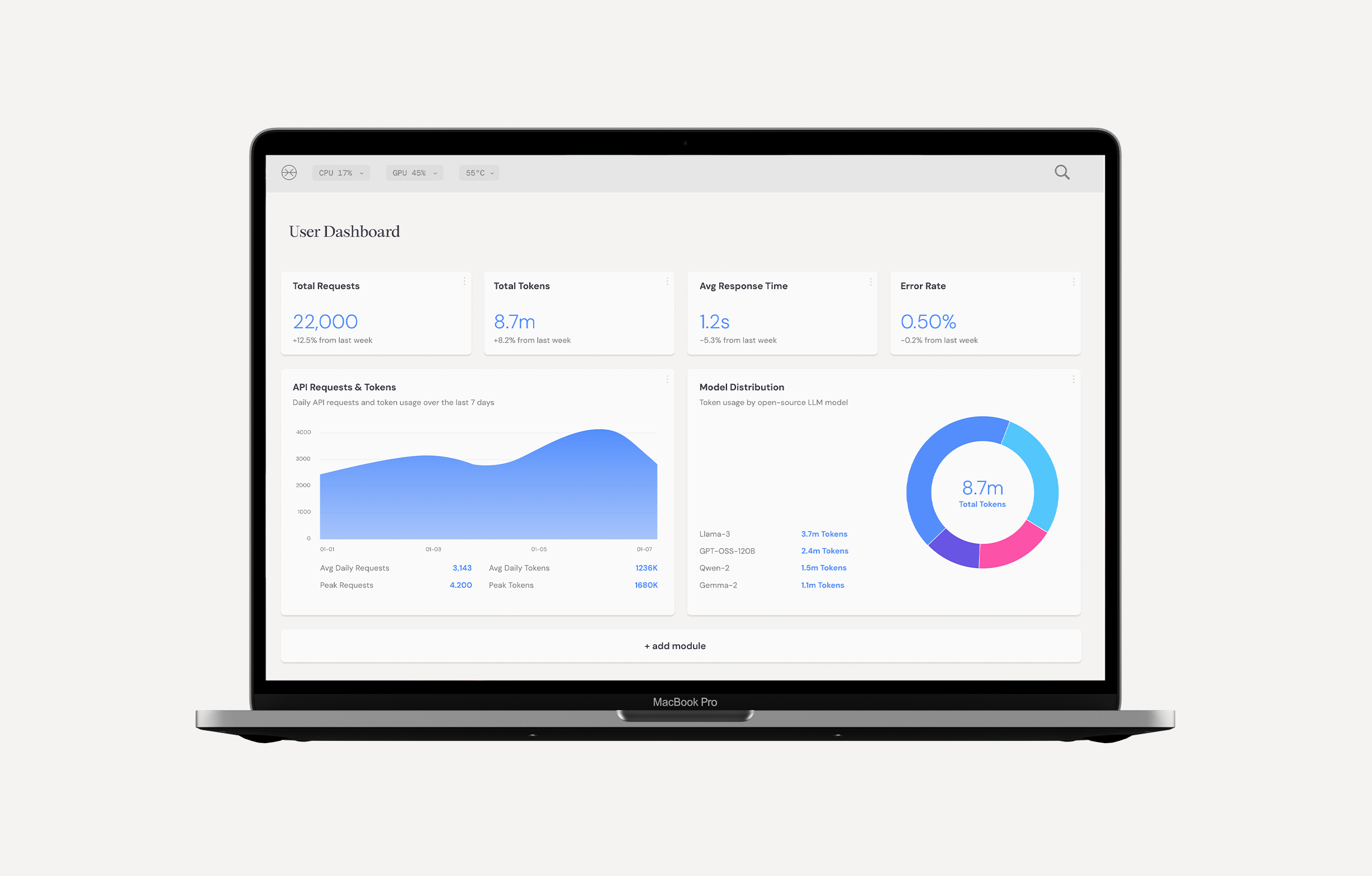

Simple, Central Interface

A unified console bundles projects, models, cluster control, and utilization in a clearly structured, user-friendly view.

Transparency and Security

Integrated monitoring with real-time metrics on utilization, combined with audit logs for traceable usage.

Intelligent Resource Distribution

Smart load balancing and policy-based compute control ensure that business-critical workloads run with priority, while less urgent tasks are automatically moved to free capacity.
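As a rough illustration of the idea, the following Python sketch shows a generic greedy priority placement. Jobs are sorted by priority, and each one goes to the node with the most free GPUs. Jobs that no longer fit are left pending until capacity frees up. This is not onprem.ai's scheduler: the `Job` and `Node` types, the GPU counts and the placement rule are all assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Job:
    # Hypothetical workload description for this sketch only.
    name: str
    gpus: int
    priority: int  # higher value = more business-critical


@dataclass
class Node:
    # Hypothetical AI server with a number of currently free GPUs.
    name: str
    free_gpus: int
    jobs: list[str] = field(default_factory=list)


def place(jobs: list[Job], nodes: list[Node]) -> list[str]:
    """Greedy priority placement (illustrative, not the product's algorithm).

    Highest-priority jobs are placed first, each on the node with the most
    free GPUs. Returns the names of jobs that could not be placed.
    """
    pending: list[str] = []
    for job in sorted(jobs, key=lambda j: j.priority, reverse=True):
        candidates = [n for n in nodes if n.free_gpus >= job.gpus]
        if not candidates:
            # No free capacity left: the less urgent job waits.
            pending.append(job.name)
            continue
        target = max(candidates, key=lambda n: n.free_gpus)
        target.free_gpus -= job.gpus
        target.jobs.append(job.name)
    return pending
```

With two nodes of 4 and 2 GPUs, a critical chat job claims the larger node first, a medium-priority report fills the smaller one, and a low-priority batch job waits. A production scheduler would additionally handle preemption, fairness and per-tenant quotas.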

Multi-Tenancy & Governance

Clear separation of tenants with their own quotas and isolated workspaces enables multiple business areas to operate securely on the same infrastructure.

Automatic Clusters

Thanks to auto-discovery, new AI servers are detected automatically and can easily be integrated into the appropriate clusters or resource groups. This allows the infrastructure to grow dynamically.

Chat.

Your Partner for Instant Work

Instant Response

Instant responses from the latest language models, noticeably faster than GPT. Direct model access ensures maximum speed with full control, ideal for productive workflows and confidential content. Developed for reliable use in professional and performance-critical environments.

Versatile.

Integrations for Chat and Custom AI Applications

Workflow Automation

No Code / Low Code

Through a visual interface, business processes can be automated entirely without programming knowledge. Data sources and work steps are connected via drag-and-drop into reusable workflows that noticeably reduce routine work.

LLM APIs, MCPs

Efficient for Developers

Standardized interfaces allow onprem AI to be integrated efficiently into existing specialized applications. LLM APIs and Model Context Protocol (MCP) servers are pre-installed on the onprem AI servers and ready for immediate use, together with developer-friendly tools and monitoring.
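For developers, a call to such an LLM API could look like the following Python sketch. It assumes an OpenAI-compatible chat completion endpoint; the base URL, the `/v1/chat/completions` path, the model name and the bearer-token header are hypothetical placeholders, not documented onprem.ai values. Only the standard library is used, and the request is built separately from sending it so it can be inspected before anything goes over the network.

```python
import json
import urllib.request

# Hypothetical values: replace with the address, model and key of your own server.
BASE_URL = "http://onprem-ai.local:8000/v1"
MODEL = "example-model"


def build_chat_request(prompt: str, api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    The endpoint path and payload shape are assumptions about an
    OpenAI-compatible API, not a confirmed onprem.ai interface.
    """
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def ask(prompt: str, api_key: str = "") -> str:
    """Send the request and return the first answer (requires a reachable server)."""
    req = build_chat_request(prompt, api_key)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from `ask` makes it easy to log or audit exactly what is sent to the local model before calling it.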

Container, Own AIs

Complete Flexibility

Custom AI models can be trained and operated on the onprem AI server in all common formats (GGUF, ONNX, PyTorch, TensorRT). Packaged as containers with GPU access (Kubernetes Helm charts, Docker Compose), even the most complex AI systems for video, audio, or images can be installed.

Cost-Transparent.

Clear Cost Structure

Questions?

We're happy to help.

Our team will gladly support you personally with technical questions, offers, or individual requirements. Many questions are already answered in our FAQ: clear, compact, and practical.